The more important an application and its data, the more we should pressure test it before deploying it in a real-world environment. For a hosted solution across a local area network or over the web, we may need to anticipate hundreds or even thousands of simultaneous users. To make sure our solution can handle those numbers, a best practice would be to create a test environment that can run essential processes in parallel to replicate high volume usage.

To do this effectively, we needed a tool to organize our tests, run test scripts in large batches, and collect the results for review: in other words, a test harness, or automated testing framework.

Existing Testing Software

While there are several well-known applications to organize software testing, they are generally designed for non-FileMaker development environments, bringing complexity and assumptions that don’t apply to FileMaker. And they can be quite expensive.

Our Goal

We wanted a simple-to-use test framework that was open-ended, adaptable, and well-suited to testing FileMaker applications. The obvious choice was to build it ourselves directly in FileMaker.

Our purpose was not to create the most complete test environment; instead, we wanted to build a basic testing solution with essential functionality that our developers could then modify and refine to suit each project’s specific testing needs.

Now, if you’re a developer, keep reading, and we’ll tell you how we built it and why we made the design choices we did…

Design Overview

We built our framework around this basic testing logic: We define a Test Case, that is, a specific process to be tested with expected results. Each Test Case may have several Test Runs, representing variations in the test. Each Test Run can call up the test process any number of times simultaneously, which we call Test Instances.

This gives us a testing hierarchy: Test Case – Test Runs – Test Instances.
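To make the hierarchy concrete, here is a minimal Python sketch of the three levels as plain data classes. It is an illustration, not the framework's FileMaker schema: the field names (`script_file`, `est_single_run_s`, `interval_s`, and so on) are assumptions loosely based on the fields described later in this article.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TestInstance:
    """One execution of the test script (one server-side call)."""
    instance_id: int
    passed: Optional[bool] = None       # None until results are parsed
    completed: bool = False
    run_time_s: Optional[float] = None


@dataclass
class TestRun:
    """A variation of a Test Case: how many instances, and how far apart."""
    num_tests: int
    interval_s: float = 0.0
    instances: List[TestInstance] = field(default_factory=list)


@dataclass
class TestCase:
    """A process to test, paired with the script that exercises it."""
    name: str
    script_file: str
    script_name: str
    est_single_run_s: float
    runs: List[TestRun] = field(default_factory=list)
```

Each Test Case owns its Test Runs, and each Test Run owns the Test Instances it spawns, mirroring the parent/child relationships in the framework file.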

Each instance (each run of a test script) returns a completion flag, a pass/fail result, and any additional data we want to pass back for review within our test framework file. The test script runs on the server and can be run in multiple instances simultaneously.

With this design, we can outline our tests, develop and call test scripts, organize teams of testers, and review results within a central file.

Using the Test Framework

First, a developer outlines a Test Case. What, specifically, do we need to test? What are the required or expected results? How many simultaneous instances do we need to be able to handle?

Test Script

With that defined, a test script can be built, either in the test framework file itself or in one of the files under development. That test script can be a parent script that calls other sub-scripts, as needed. It gathers all necessary data, performs an initial pass or fail assessment, and returns the results to the test framework.

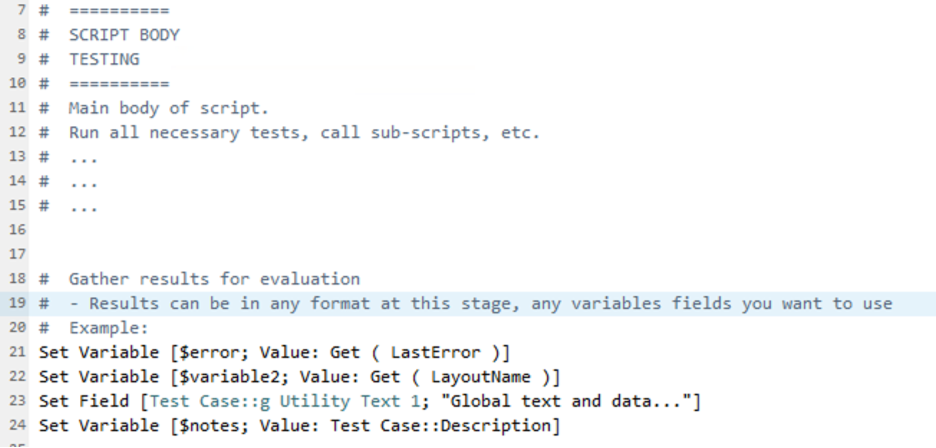

We include a thoroughly commented script named “[TEST] Sample Test Script” in the test framework file to remind our developers of the required elements in their own test scripts.

The main body of the script can perform any action or test needed, including calling sub-scripts, while gathering the data used to evaluate results and pass them back to the test framework.

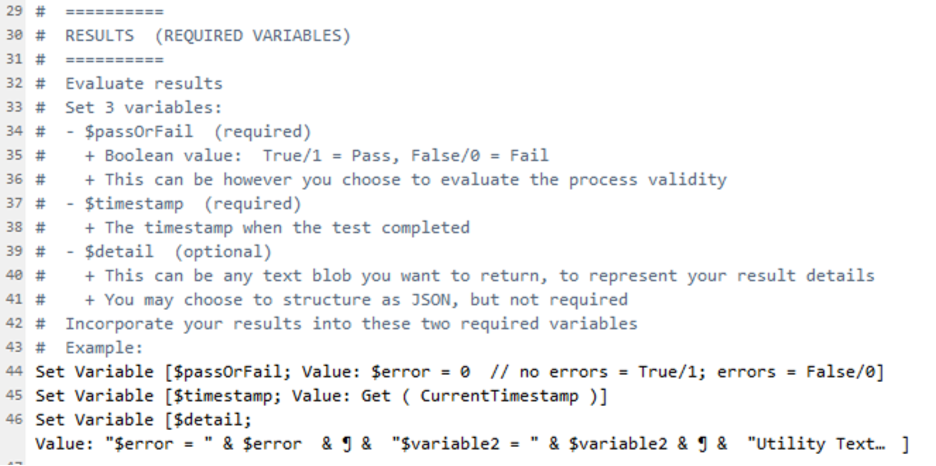

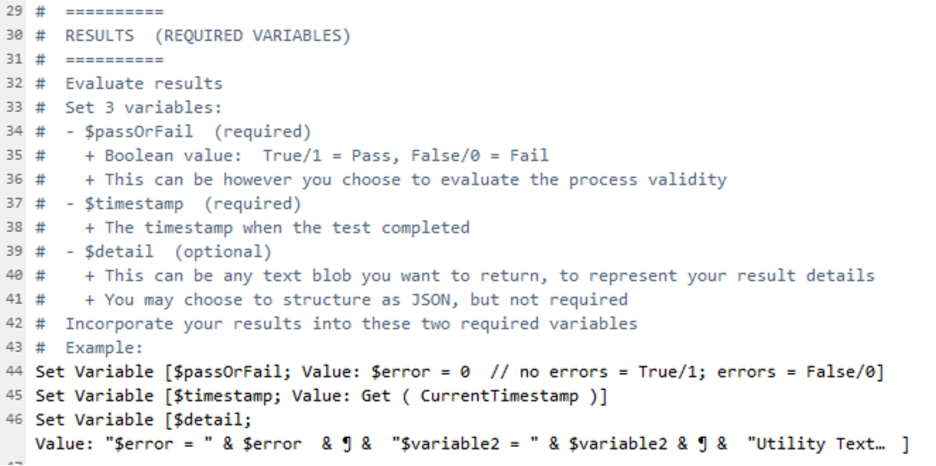

Three variables should be set near the end of the test script:

- $passOrFail (required)

– Boolean: True/1 = Pass, False/0 = Fail

- $timestamp (required)

– The time when the process completed

- $detail (optional)

– This can be any relevant data in any format to be passed back to the test framework.

The final step in each test script must be an Exit Script with those variables included in JSON format as part of the script result calculation. For simplicity, copy the Exit Script step from the sample script to all new test scripts.
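The exact Exit Script calculation lives in the sample script; in FileMaker it would typically be built with a function such as JSONSetElement. As a language-neutral illustration, the Python sketch below shows the round trip of such a result. The JSON key names (`passOrFail`, `timestamp`, `detail`) are assumptions that simply mirror the variable names above.

```python
import json
from datetime import datetime
from typing import Any


def build_result(pass_or_fail: bool, timestamp: datetime, detail: Any = None) -> str:
    """Serialize the three result variables as a JSON script result."""
    payload = {
        "passOrFail": 1 if pass_or_fail else 0,  # Boolean stored as 1/0
        "timestamp": timestamp.isoformat(),
    }
    if detail is not None:                       # $detail is optional
        payload["detail"] = detail
    return json.dumps(payload)


def read_result(script_result: str) -> dict:
    """Parse a script result back into Python values; missing detail becomes None."""
    data = json.loads(script_result)
    return {
        "passed": bool(data["passOrFail"]),
        "timestamp": datetime.fromisoformat(data["timestamp"]),
        "detail": data.get("detail"),
    }
```

Keeping all three values in one JSON object means the calling script only has to read a single script result, however much detail a particular test chooses to return.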

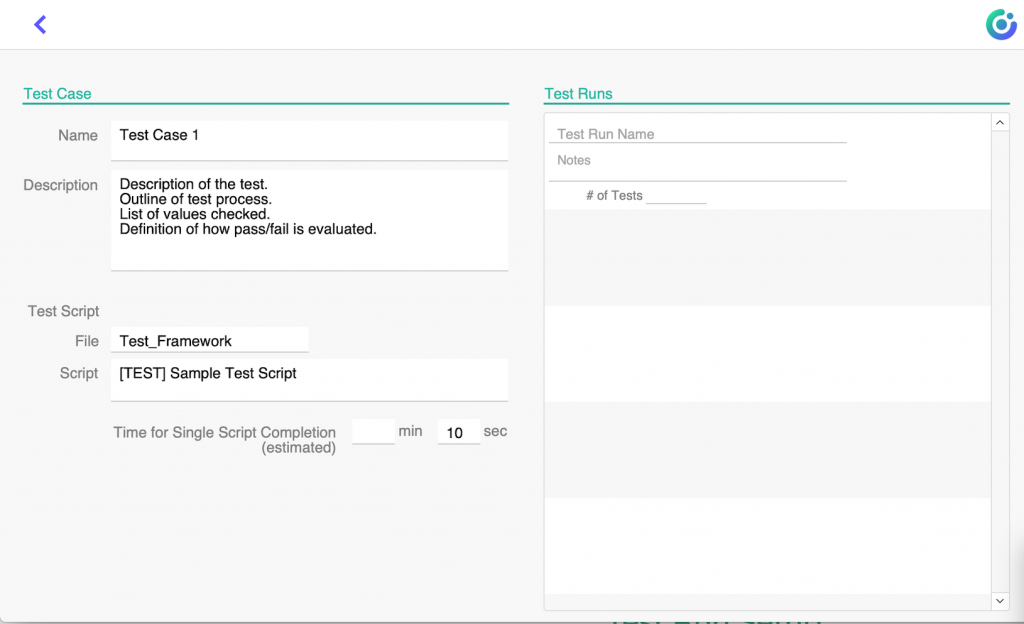

Test Case

Once the test script is in place, the developer can then create a new Test Case record in the framework file. That Test Case is then associated with the new test script by specifying the file where the test script resides and the script name. (To limit the number of scripts in the value list selection, we chose to add a prefix of “[TEST]” to our test script names and then filter the value list by that prefix.)
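The prefix filter is simple string matching. A minimal Python sketch of the idea follows; the function name `filter_test_scripts` is hypothetical, and in FileMaker the equivalent filtering would happen in the value list definition rather than in code.

```python
from typing import Iterable, List

TEST_PREFIX = "[TEST]"


def filter_test_scripts(script_names: Iterable[str], prefix: str = TEST_PREFIX) -> List[str]:
    """Keep only script names that carry the test prefix, sorted for display."""
    return sorted(name for name in script_names if name.startswith(prefix))
```

For a file containing "Startup", "Report", "[TEST] Sample Test Script", and "[TEST] Invoice Load", only the two "[TEST]" scripts would be offered for selection.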

Finally, we added the ability to enter an estimated time for completion of a single run of the test script.

That’s it for setting up the Test Case: a testing scenario paired with a test script.

Test Run Setup

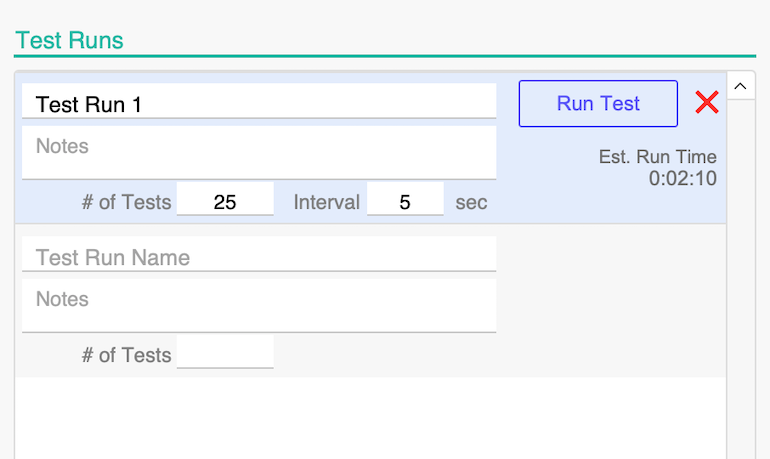

At that point, we can create our first Test Run in the portal immediately to the right.

The defining element of the Test Run is the ‘# of Tests’: the number of instances of the test script to run. When this value is more than one, you can also enter an ‘Interval’ to specify how many seconds apart the instances should start.

An estimated run time appears in the Test Run portal row based on the time for single script completion, the number of tests, and the interval.

The estimated run time is not simply the number of tests multiplied by the estimated single script run time. Because the scripts are queued up to run in parallel, the estimate is the number of tests minus one, multiplied by the interval, plus the expected run time of the final instance of the test script.
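The estimate can be written as a one-line formula. The Python sketch below is an illustration of that arithmetic, not the framework's calculation field; the parameter names are assumptions.

```python
def estimated_run_time(num_tests: int, interval_s: float, single_run_s: float) -> float:
    """Estimate wall-clock time for a Test Run whose instances overlap.

    The last instance starts (num_tests - 1) * interval_s after the first,
    then needs single_run_s to finish.
    """
    if num_tests < 1:
        raise ValueError("num_tests must be at least 1")
    return (num_tests - 1) * interval_s + single_run_s
```

For example, 10 instances started 2 seconds apart, each expected to take 30 seconds, give 9 × 2 + 30 = 48 seconds, compared with 300 seconds if the same instances ran one after another. The estimate assumes the server does not slow down under parallel load; measuring whether it does is, of course, the point of the test.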

Run Test

Once the Test Run has been defined, click the ‘Run Test’ button in the portal row. The test framework will queue up the specified number of ‘master test scripts’ (described below) to run on the server; each of these, in turn, calls the developer-created test script. After each instance is called, the framework pauses for the specified interval.

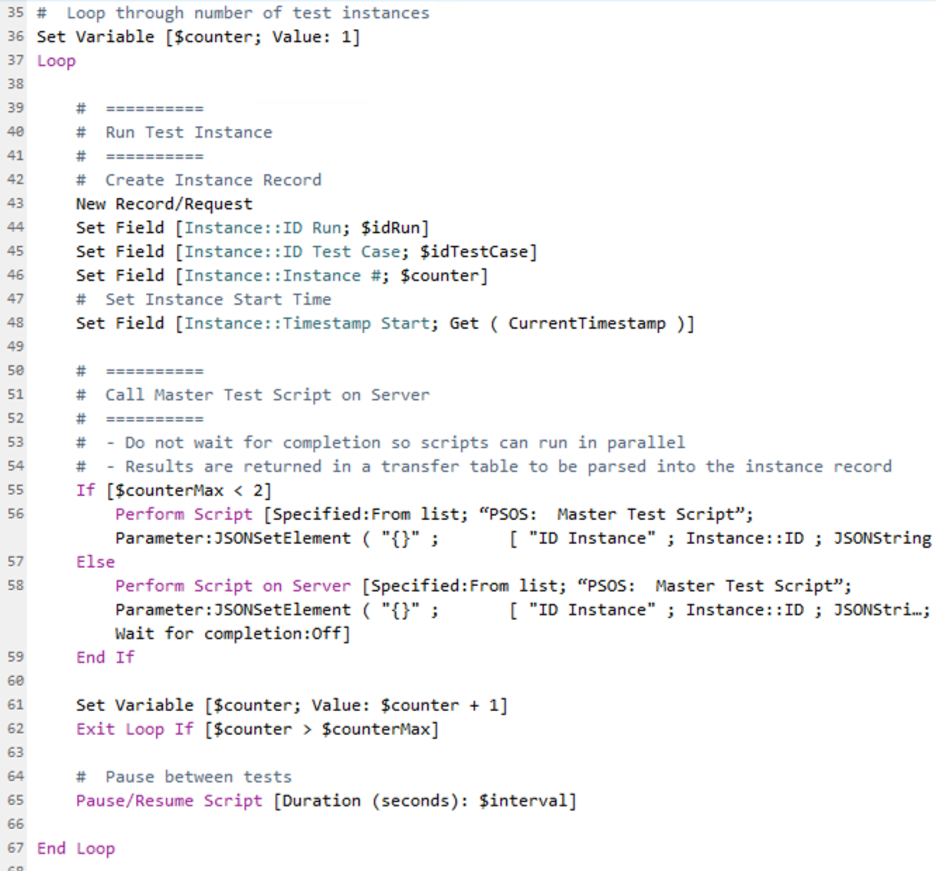

The Run Test script is built around a loop that does three things:

- Creates a new Test Instance record

- Calls the PSOS: Master Test Script

– As the name suggests, this is run on the server using Perform Script on Server.

– When multiple scripts are being queued up, “Wait for completion” is set to “Off.”

– Three parameters are sent (The Instance record’s ID, Test Script File, Test Script Name)

- Pauses for the specified interval before looping for the next instance
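The loop above can be sketched in Python, with a thread pool standing in for Perform Script on Server with "Wait for completion" off: each call is submitted and the loop moves on without waiting. This is an analogy under stated assumptions, not the framework's script. The sequential `instance_id` replaces creating an Instance record, and the choice to skip the pause after the last instance (since there is no next instance to wait for) is ours.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, List, Tuple


def run_test(
    num_tests: int,
    interval_s: float,
    script_file: str,
    script_name: str,
    master_script: Callable[[int, str, str], None],
    executor: ThreadPoolExecutor,
) -> Tuple[List[int], List[Future]]:
    """Queue num_tests instances of master_script without waiting for them."""
    instance_ids: List[int] = []
    futures: List[Future] = []
    for i in range(num_tests):
        instance_id = i + 1                 # stand-in for creating an Instance record
        instance_ids.append(instance_id)
        # Like PSOS with "Wait for completion" off: submit and move on.
        futures.append(executor.submit(master_script, instance_id, script_file, script_name))
        if i < num_tests - 1:
            time.sleep(interval_s)          # client-side interval pause
    return instance_ids, futures
```

The three arguments passed to `master_script` correspond to the three PSOS parameters listed above: the Instance record's ID, the test script file, and the test script name.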

Note about the interval pause: We chose a basic design that places the interval pause in the client-side session as it calls each instance of the test script to run on the server. The drawback is that a test run with many instances and long intervals keeps the client busy for the whole queuing period, which can be frustratingly slow. This may prompt a redesign in future versions.

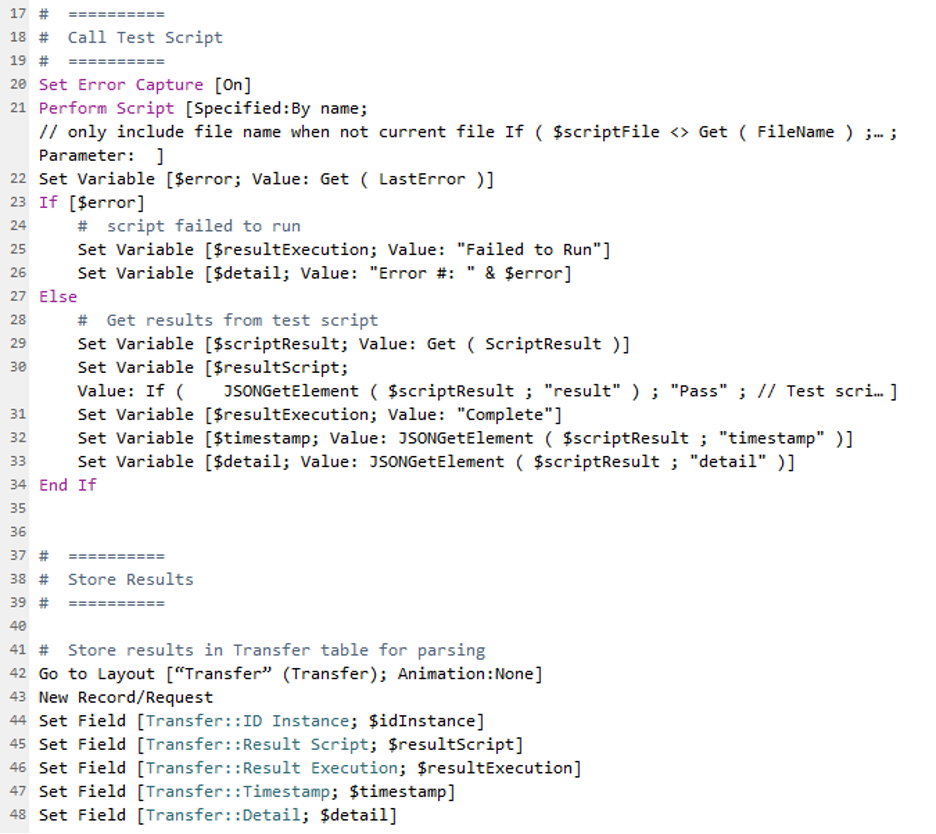

PSOS: Master Test Script

The PSOS: Master Test Script runs on the server and can run in parallel with itself any number of times, depending on the ‘# of Tests’ selected in the Test Run settings. It performs two key actions:

- Calls the developer-specified test script using Perform Script by name.

- Gathers the returned results and stores them in a transfer record.

– The record created in the Transfer table includes the Instance record’s ID so it can be paired up in the final parsing process.
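In Python terms, "Perform Script by name" is a lookup of a callable by its name, and the Transfer table is a list of records keyed by instance ID. The sketch below is illustrative only: the dictionary `registry` is a hypothetical stand-in for the scripts in the target file (the file parameter is omitted for brevity), and the transfer record's field names are assumptions.

```python
import json
from typing import Callable, Dict, List

# Hypothetical registry standing in for "Perform Script by name".
ScriptRegistry = Dict[str, Callable[[], str]]


def master_test_script(
    instance_id: int,
    script_name: str,
    registry: ScriptRegistry,
    transfer_table: List[dict],
) -> None:
    """Run the named test script and store its result in a transfer record."""
    script_result = registry[script_name]()     # look up and run the test script by name
    transfer_table.append({
        "instance_id": instance_id,             # key used later to pair with the Instance
        "result": json.loads(script_result),    # the JSON returned by Exit Script
    })
```

Because every transfer record carries the instance ID, the records can be written in any order by parallel instances and still be matched up correctly afterwards.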

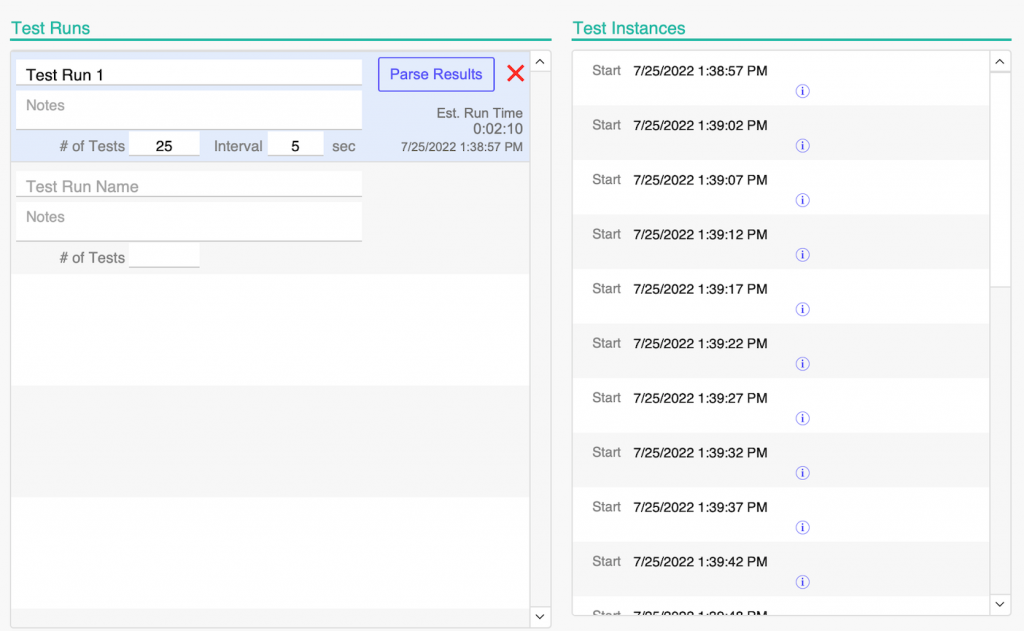

Watching the Instances

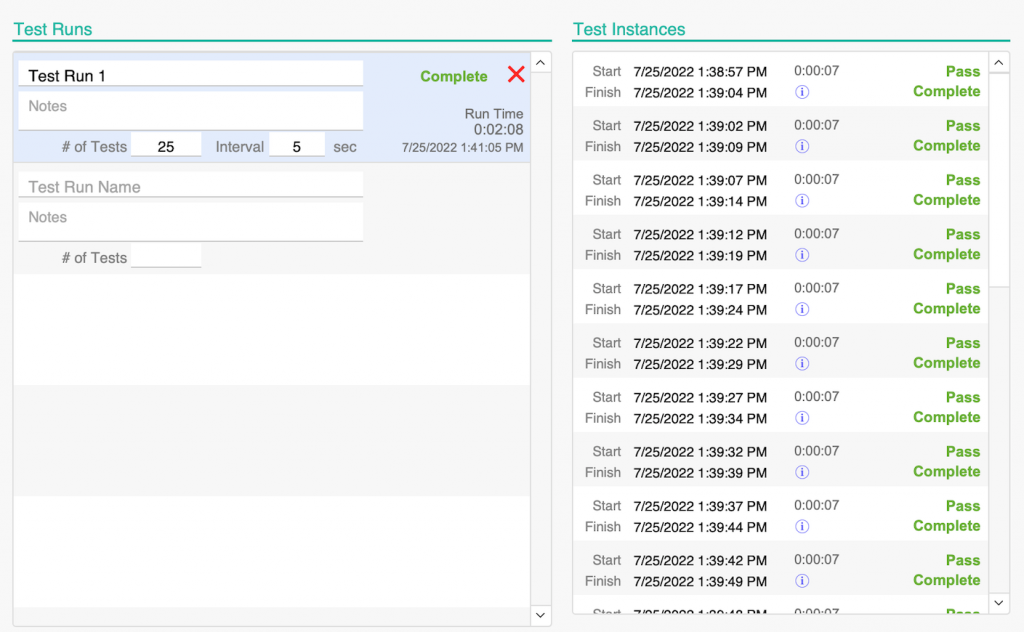

As each instance of the script is queued up, it appears in the Instances list. Once all are queued up, we return to the main layout of the framework, where all queued Test Instances appear in the portal to the right with their start times.

Parsing the Results

Once all instances have been queued up, and the last script has had time to complete, click the ‘Parse Results’ button.

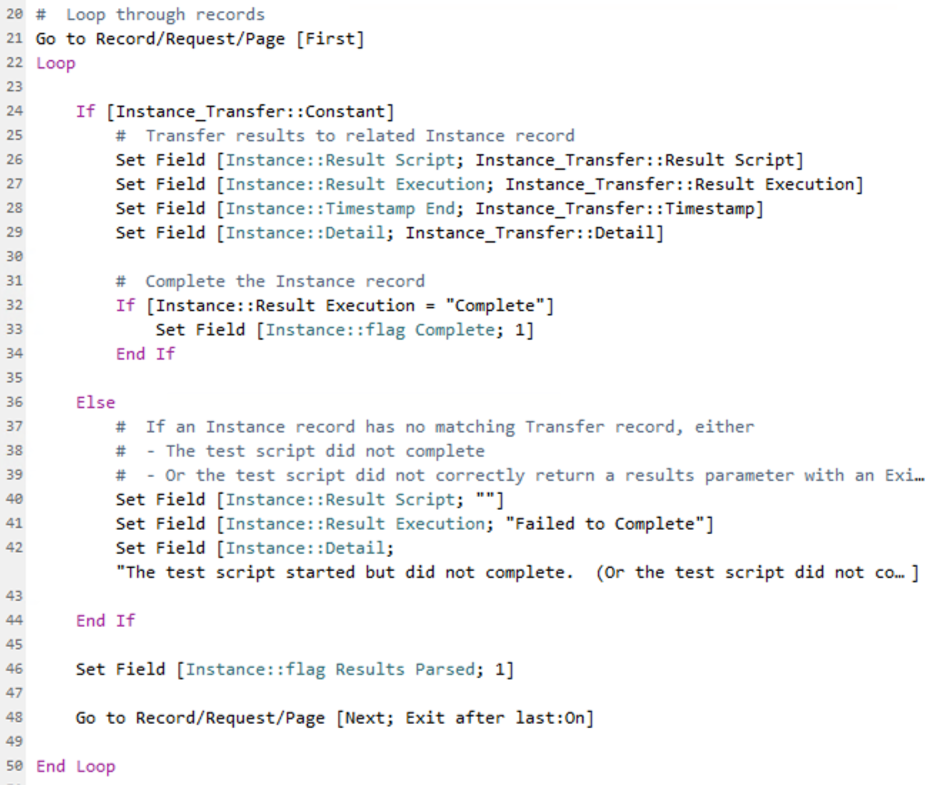

The Parse Results script loops through the related Instance records and pulls in the data from the related Transfer table records (created and populated by the PSOS: Master Test Script). If an Instance has no related Transfer record, a “Failed to Complete” result is passed back.
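The pairing step amounts to a join on the instance ID, with a default for instances that never produced a transfer record. A minimal Python sketch follows; the output field names are assumptions, and treating a missing result as not passed is our choice for the illustration.

```python
from typing import Dict, List

FAILED_TO_COMPLETE = "Failed to Complete"


def parse_results(instance_ids: List[int], transfer_table: List[dict]) -> Dict[int, dict]:
    """Pair each Instance with its Transfer record by instance ID."""
    by_id = {rec["instance_id"]: rec["result"] for rec in transfer_table}
    parsed: Dict[int, dict] = {}
    for instance_id in instance_ids:
        result = by_id.get(instance_id)
        if result is None:
            # No transfer record: the test script never returned a result.
            parsed[instance_id] = {"status": FAILED_TO_COMPLETE, "passed": False}
        else:
            parsed[instance_id] = {"status": "Complete", "passed": bool(result["passOrFail"])}
    return parsed
```

This is also why the button should only be clicked after the last instance has had time to finish: an instance that is still running has no transfer record yet and would be reported as "Failed to Complete".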

Viewing the Results

The results can be seen in the portal rows. Each Test Instance shows a Pass or Fail result, a completion status, and the individual instance’s run time.

The Test Run, being the parent of all associated Test Instances, gives a summary status of “Complete” or “Issues” along with the full run time for all instances.
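The roll-up from instances to a run summary can be sketched as below. The rule used here, "Issues" whenever any instance failed or did not complete, is an assumption about the framework's logic, as is measuring the full run time from the earliest start to the latest recorded finish.

```python
from typing import List, Optional, TypedDict


class InstanceResult(TypedDict):
    completed: bool
    passed: bool
    start_s: float
    end_s: Optional[float]   # None if the instance never completed


def summarize_run(instances: List[InstanceResult]) -> dict:
    """Roll instance results up to a Test Run status and total run time."""
    if not instances:
        raise ValueError("a Test Run needs at least one instance")
    all_ok = all(i["completed"] and i["passed"] for i in instances)
    ends = [i["end_s"] for i in instances if i["end_s"] is not None]
    # Full run time: first start to last recorded finish.
    total = (max(ends) - min(i["start_s"] for i in instances)) if ends else None
    return {"status": "Complete" if all_ok else "Issues", "run_time_s": total}
```

Because instances overlap, the full run time is usually much shorter than the sum of the individual instance run times.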

Instance Details

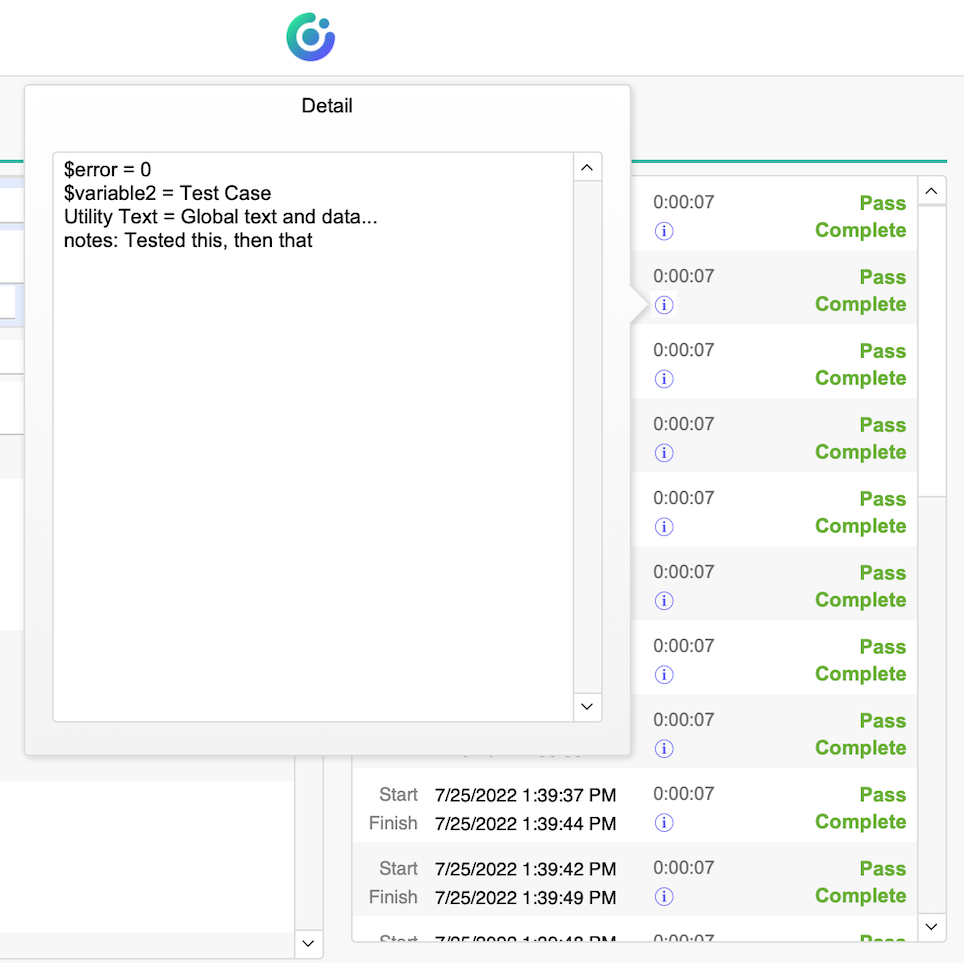

All information passed back in the test script’s $detail variable can be viewed by clicking the information popover button.

Summary

With this straightforward approach, we can define multiple tests, organize teams of testers, replicate environments with high volume usage, and review our results. The simple FileMaker-based design also allows us to quickly customize and adapt our basic tool to fit our changing test needs.
